Girish Gupta

/ˈɡɪ-rɪʃ ɡʊp-tə/ | गिरिश गुप्ता | غِرِشْ غُبْتَة

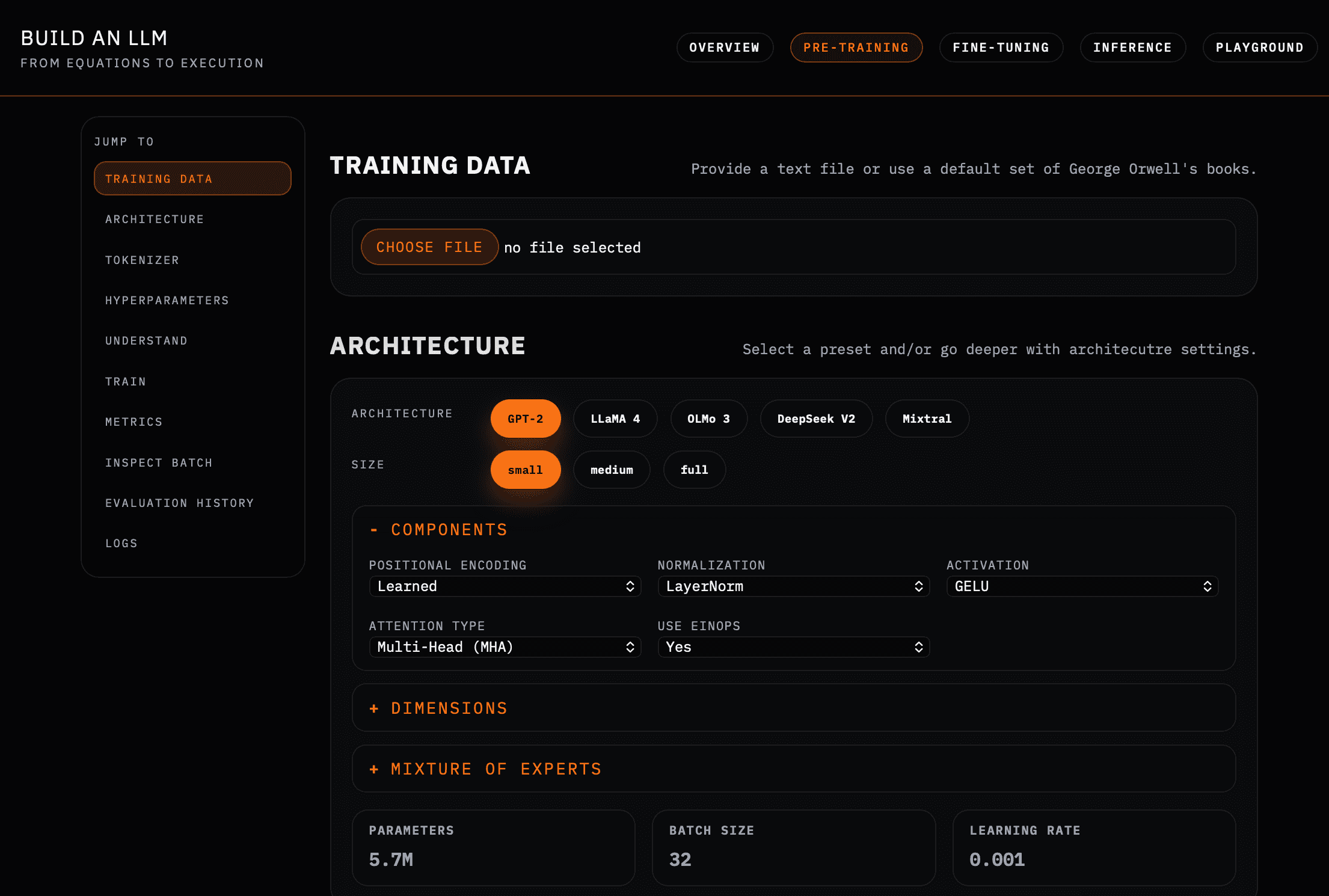

Build an LLM

Build and train a large language model from scratch at home.

Beyond the Parrot

Sign up to my newsletter on modern AI: Anatomy, agency, and a world beyond the stochastic parrot.

Always Go

My memoir charting a decade of reporting on global crises, from Venezuela to Iraq, for the world's most prestigious news outlets—and a sharp critique of the news industry.

I'm an AI interpretability researcher working to understand how artificial intelligence models actually work. I believe mechanistic interpretability is essential for AI safety, which makes it one of the most important scientific challenges of our time.

My work draws on an unusual career path: theoretical physics (my academic foundation), investigative journalism (a decade reporting on global crises), and Silicon Valley engineering (building AI systems across multiple industries).

As an engineer and tech leader, I have built AI infrastructure and software for news, public policy, human rights investigations, accounting, and healthcare. I also built software that brings simple, live economic data to people living through hyperinflation. I've seen firsthand how shortcuts and misunderstandings can lead to serious real-world consequences.

Before entering tech, I reported on global crises for The New Yorker, The New York Times, Reuters, Time Magazine, the BBC, NPR, and many others. Most of that reporting was from Venezuela, where I lived for nearly a decade, but I also covered Iraq, Afghanistan, Colombia, Cuba, Mexico, Lebanon, Jordan, Guyana, Brazil, and Egypt. That experience led me to write a memoir, Always Go, a sharp critique of a news industry that, much like AI, is often shaped by reward hacking and misaligned incentives.

In my spare time, I enjoy photography, learning history, and playing the piano. I live in San Francisco with my wife and children.

Please email or message me if you'd like to chat!